By mid-2025, AI development tools have graduated from optional aids to an integral part of the enterprise software development workflow, influencing everything from code completion to full-stack agentic frameworks. Surveys have found that more than 70% of teams now rely on AI to speed up software delivery, and many report productivity gains of 20% or more.

As businesses deploy AI across the entire SDLC, from requirements analysis to deployment, developer productivity in 2025 is rooted in agentic, multimodal, secure tooling. In this article, we spotlight the tools and platforms leading that wave, their core functions, developer use cases, and what they suggest about where enterprise AI development is headed next.

RAG Stack

RAG (Retrieval-Augmented Generation) has grown from an experimental workflow into a base architecture for enterprise AI systems. A RAG stack combines embedding generation, vector search, and large language models to produce responses that are contextual and grounded in retrieved source material. Rather than letting an LLM answer confidently under uncertainty, which is where hallucinations arise, a RAG pipeline first retrieves relevant internal or external documents and passes them to the LLM as context, so the generated answer is conditioned on that material.

Contemporary RAG stacks often comprise a vector database, such as Pinecone or Weaviate, an LLM orchestration layer (e.g., LangChain, LlamaIndex), and model endpoints, such as OpenAI, Anthropic, or Google. This modular approach allows developers to create enterprise‑safe chatbots, automated research agents, and domain‑specific copilots without exposing sensitive information to uncontrolled model outputs.
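The retrieve-then-generate flow can be sketched in a few lines. This is a minimal illustration, not a production design: the `DOCUMENTS` corpus, the bag-of-words `embed` function, and the prompt wording are all assumptions made for the example, and a real stack would swap in an embedding model, a vector database, and an actual LLM call.

```python
import math
import re
from collections import Counter

# Hypothetical in-memory corpus standing in for enterprise documents.
DOCUMENTS = {
    "policy-001": "Refunds are issued within 14 days of a returned purchase.",
    "policy-002": "Support tickets are answered within one business day.",
    "policy-003": "Employees accrue vacation days monthly.",
}


def embed(text: str) -> Counter:
    """Toy embedding: a term-count vector (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, k: int = 2) -> list:
    """Return ids of the k most similar documents, skipping zero-score matches."""
    q = embed(query)
    scored = sorted(
        ((cosine(q, embed(text)), doc_id) for doc_id, text in DOCUMENTS.items()),
        reverse=True,
    )
    return [doc_id for score, doc_id in scored[:k] if score > 0]


def build_prompt(query: str, doc_ids: list) -> str:
    """Condition the model on retrieved context instead of letting it guess."""
    context = "\n".join(f"[{doc_id}] {DOCUMENTS[doc_id]}" for doc_id in doc_ids)
    return (
        "Answer using only the context below and cite document ids.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    question = "How long do refunds take?"
    print(build_prompt(question, retrieve(question)))
```

The resulting prompt string is what would be sent to the model endpoint; keeping retrieval and prompt construction as separate functions mirrors the modular layout described above.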

Key Features

- Combines retrieval with generation for higher factual accuracy.

- Supports hybrid search: semantic, keyword, or metadata‑driven.

- Modular setup with interchangeable vector DBs and LLMs.

- Improves compliance by keeping sensitive data under enterprise control.
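As a rough sketch of hybrid search, the snippet below blends a semantic score with a keyword-overlap score and applies a metadata filter first. The `Doc` fields, the `alpha` weighting, and the made-up two-dimensional vectors are assumptions for illustration only; real systems typically use learned embeddings and a ranking function such as BM25 for the keyword side.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class Doc:
    doc_id: str
    text: str
    vector: Sequence[float]  # precomputed unit-length embedding (made-up values)
    department: str          # metadata used for filtering


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    # For unit-length vectors the dot product equals cosine similarity.
    return sum(x * y for x, y in zip(a, b))


def keyword_score(query: str, text: str) -> float:
    """Fraction of query terms that appear in the document text."""
    q_terms = set(query.lower().split())
    t_terms = set(text.lower().split())
    return len(q_terms & t_terms) / len(q_terms) if q_terms else 0.0


def hybrid_search(
    query: str,
    query_vector: Sequence[float],
    docs: List[Doc],
    department: Optional[str] = None,
    alpha: float = 0.5,
) -> List[str]:
    """Rank docs by alpha * semantic + (1 - alpha) * keyword, after a metadata filter."""
    results = []
    for doc in docs:
        if department is not None and doc.department != department:
            continue  # metadata-driven filtering
        score = alpha * dot(query_vector, doc.vector) + (1 - alpha) * keyword_score(query, doc.text)
        results.append((score, doc.doc_id))
    return [doc_id for _, doc_id in sorted(results, reverse=True)]


if __name__ == "__main__":
    corpus = [
        Doc("a", "quarterly revenue report", (1.0, 0.0), "finance"),
        Doc("b", "revenue forecast model", (0.6, 0.8), "finance"),
        Doc("c", "onboarding handbook", (0.0, 1.0), "hr"),
    ]
    print(hybrid_search("revenue report", (1.0, 0.0), corpus, department="finance"))
```

Setting `alpha` closer to 1 favors semantic similarity, while values closer to 0 favor exact keyword matches.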

Pros

- Reduces hallucinations and improves trustworthiness of AI outputs.

- Flexible architecture that scales across industries and data sources.

Cons

- Requires careful pipeline design to avoid latency in multi‑component systems.

- Quality depends heavily on data cleanliness and embedding strategy.

RAG stacks power many of the real-world enterprise copilots in 2025. Cohere’s Command R+ model is optimized for RAG workflows and answers questions with grounded references, which makes it well suited to legal and financial research. Morgan Stanley deployed a RAG-driven knowledge assistant that securely retrieves internal wealth management documents, supporting compliance while improving advisor efficiency.

LangChain

LangChain is a central framework for AI app development in 2025, enabling workflows that span multiple LLM calls. Originally developed to chain language model prompts, it has matured to support complex agentic pipelines in which LLM systems consume databases, APIs, and multi-modal inputs in a structured way. Businesses count on LangChain to turn large language models into production systems, from intelligent assistants to research bots.

Its 2025 iteration is built around integration and orchestration. A developer can connect multiple specialized agents (e.g., a data retrieval agent and a reasoning agent) out of the box while keeping control over memory, context, and response validation. Integrated with vector databases and retrieval stacks, LangChain is the backbone of many modern RAG systems.
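To make the idea of chained, specialized agents concrete, here is a plain-Python sketch of the pattern. It deliberately does not use LangChain's API: the `retrieval_agent`, `reasoning_agent`, `validate_response`, and `run_chain` names are hypothetical, and the "LLM" is a stub that formats a string.

```python
from typing import Callable, Dict, List

State = Dict[str, object]
Step = Callable[[State], State]


def retrieval_agent(state: State) -> State:
    """Fetch context for the question (hypothetical lookup, not a real vector store)."""
    knowledge = {"sla": "Tickets are answered within one business day."}
    key = "sla" if "ticket" in str(state["question"]).lower() else None
    return {**state, "context": knowledge.get(key, "")}


def reasoning_agent(state: State) -> State:
    """Stand-in for an LLM call that answers from the retrieved context."""
    context = state["context"]
    answer = f"Based on policy: {context}" if context else "I don't know."
    return {**state, "answer": answer}


def validate_response(state: State) -> State:
    """Response validation: accept only answers that quote the retrieved context."""
    grounded = bool(state["context"]) and str(state["context"]) in str(state["answer"])
    return {**state, "validated": grounded}


def run_chain(steps: List[Step], question: str) -> State:
    """Run steps in order, threading shared state (the chain's memory) through them."""
    state: State = {"question": question, "history": []}
    for step in steps:
        state = step(state)
        state["history"].append(step.__name__)  # record which step ran
    return state


if __name__ == "__main__":
    chain = [retrieval_agent, reasoning_agent, validate_response]
    print(run_chain(chain, "How fast are tickets answered?"))
```

Because each step only reads and returns the shared state, steps can be reordered, replaced, or tested in isolation, which is the main appeal of a modular chain.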

Key Features

- Seamless integration with multiple LLMs and multi‑modal inputs.

- Built‑in support for vector databases and RAG pipelines.

- Agent orchestration for complex reasoning and workflow automation.

- Modular structure for production‑grade deployment in enterprise environments.

Pros

- Flexible and highly customizable for varied AI applications.

- Strong ecosystem and active developer community.

Cons

- Steeper learning curve for non‑expert developers.

- Requires careful optimization to control cost and latency in production.

LangChain already has real-world adoption at the enterprise level. Infor uses it to deliver AI assistants in its Infor OS platform that automate multi-cloud workflows in industries ranging from aerospace to healthcare. Vodafone has rolled out LangChain-powered agents such as the Insight Engine, which translates natural language questions into SQL for rapid performance analysis, and Enigma, which retrieves technical documentation to aid diagnosis. Meanwhile, Rakuten deployed an internal assistant to 32,000 employees worldwide in less than a week for domain-aware knowledge retrieval. These deployments demonstrate LangChain’s 2025 positioning as the basis for enterprise RAG pipelines, multi-agent orchestration, and secure, high-volume automation.

Pinecone

Pinecone has become the go-to vector database for enterprises building AI applications that rely on semantic search and retrieval-augmented generation (RAG). What sets Pinecone apart is its emphasis on performance and simplicity. Instead of burdening developers with managing complicated infrastructure or sharded similarity search setups, Pinecone provides serverless scaling, automatic indexing, and multi-region support. This makes it particularly well suited to applications in which the speed and quality of responses determine the user experience, e.g., personalized search or real-time recommendation systems.

Key Features

- Fully managed vector database with serverless auto‑scaling.

- Sub‑second query performance for billions of embeddings.

- Native integration with frameworks like LangChain, OpenAI, and RAG stacks.

- Multi‑region deployments with enterprise‑grade security and compliance.
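For readers new to vector databases, the core operation is nearest-neighbor lookup over embeddings. The sketch below is a brute-force, in-memory toy and not Pinecone's client: the `TinyVectorIndex` class and its method names are hypothetical, and a managed service replaces the linear scan with approximate indexes, sharding, and persistence.

```python
import heapq
import math
from typing import Dict, List, Sequence, Tuple


def _normalize(vector: Sequence[float]) -> List[float]:
    """Scale a vector to unit length so dot products equal cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vector))
    if norm == 0:
        raise ValueError("zero-length vectors cannot be indexed or queried")
    return [x / norm for x in vector]


class TinyVectorIndex:
    """Toy in-memory index: stores unit vectors and scans all of them per query."""

    def __init__(self) -> None:
        self._vectors: Dict[str, List[float]] = {}

    def upsert(self, item_id: str, vector: Sequence[float]) -> None:
        """Insert a vector, or overwrite the existing one with the same id."""
        self._vectors[item_id] = _normalize(vector)

    def query(self, vector: Sequence[float], top_k: int = 3) -> List[Tuple[str, float]]:
        """Return the top_k (id, cosine similarity) pairs, best match first."""
        q = _normalize(vector)
        scored = (
            (sum(a * b for a, b in zip(q, stored)), item_id)
            for item_id, stored in self._vectors.items()
        )
        return [(item_id, score) for score, item_id in heapq.nlargest(top_k, scored)]


if __name__ == "__main__":
    index = TinyVectorIndex()
    index.upsert("shoes", [1.0, 0.0])
    index.upsert("boots", [0.9, 0.1])
    index.upsert("laptop", [0.0, 1.0])
    print(index.query([1.0, 0.0], top_k=2))
```

The linear scan costs time proportional to the number of stored vectors, which is exactly the bottleneck that production vector databases are built to avoid.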

Pros

- Highly reliable and optimized for production‑scale workloads.

- Simple API and SDKs make integration with AI pipelines fast.

Cons

- Proprietary cloud solution; self‑hosting is not an option.

- Costs can increase with extremely large vector volumes.

Pinecone sits at the center of AI stacks requiring real‑time, context‑rich outputs, such as chatbots that access proprietary knowledge while maintaining data isolation, or recommendation engines that respond instantly to behavior changes.

OpenRouter

OpenRouter has become a developer favorite in 2025 for routing AI traffic across multiple LLM providers without locking into a single ecosystem. In practice, it acts as a unified API layer that lets engineers tap into models from OpenAI, Anthropic, Google, Mistral, and local deployments—all while managing cost, latency, and compliance in one place. Instead of juggling credentials and separate SDKs, developers now use OpenRouter to compare model performance on the fly, optimize spending, and switch models seamlessly as project requirements evolve.

This flexibility has proven essential for multi-model applications. Teams can, for example, send short, low-risk queries to an inexpensive model for speed and cost savings, while routing complex, compliance-heavy requests to a more robust, enterprise-tier LLM. Paired with frameworks like LangChain or AutoGen, OpenRouter becomes a backbone for agentic, multi-model AI architectures that balance experimentation with production reliability.
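The routing idea described here can be expressed as a small policy function. This is a sketch under stated assumptions: the model identifiers, the `contains_sensitive_data` flag, and the 50-word threshold are hypothetical placeholders, not OpenRouter configuration.

```python
from dataclasses import dataclass

# Hypothetical model identifiers; substitute whatever your routing layer exposes.
CHEAP_MODEL = "small-fast-model"
ENTERPRISE_MODEL = "enterprise-tier-model"


@dataclass(frozen=True)
class Request:
    prompt: str
    contains_sensitive_data: bool = False  # assumed to be set by an upstream classifier


def choose_model(request: Request, max_cheap_words: int = 50) -> str:
    """Route short, low-risk prompts to the cheap model and everything else upmarket."""
    if request.contains_sensitive_data:
        return ENTERPRISE_MODEL  # compliance-heavy traffic always takes the robust path
    if len(request.prompt.split()) > max_cheap_words:
        return ENTERPRISE_MODEL  # long prompts are treated as complex
    return CHEAP_MODEL


if __name__ == "__main__":
    print(choose_model(Request("Summarize this sentence.")))
    print(choose_model(Request("Review this contract clause.", contains_sensitive_data=True)))
```

Keeping the policy in one pure function makes it easy to unit test and to adjust thresholds as pricing or model quality changes.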

Key Features

- Single API access to multiple commercial and open-source LLMs.

- Built-in cost, latency, and token usage tracking.

- Easy integration with orchestration tools like LangChain and AutoGen.

- Supports flexible routing policies for performance and compliance needs.
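Centralized usage tracking of this kind amounts to aggregating tokens, latency, and cost per model. The following sketch assumes made-up prices and a hypothetical `UsageTracker` class; it illustrates the bookkeeping only and does not reflect any provider's actual pricing or API.

```python
from collections import defaultdict
from typing import Dict, List

# Hypothetical prices in USD per 1,000 tokens.
PRICE_PER_1K: Dict[str, float] = {
    "small-fast-model": 0.5,
    "enterprise-tier-model": 10.0,
}


class UsageTracker:
    """Accumulates token counts and latencies per model and derives cost."""

    def __init__(self, price_per_1k: Dict[str, float]) -> None:
        self.price_per_1k = price_per_1k
        self.tokens: Dict[str, int] = defaultdict(int)
        self.latencies_ms: Dict[str, List[float]] = defaultdict(list)

    def record(self, model: str, tokens: int, latency_ms: float) -> None:
        """Log one completed request."""
        self.tokens[model] += tokens
        self.latencies_ms[model].append(latency_ms)

    def cost(self, model: str) -> float:
        """Cost in USD for all tokens recorded against one model."""
        return self.tokens[model] / 1000 * self.price_per_1k[model]

    def mean_latency_ms(self, model: str) -> float:
        samples = self.latencies_ms[model]
        return sum(samples) / len(samples) if samples else 0.0

    def total_cost(self) -> float:
        return sum(self.cost(model) for model in list(self.tokens))


if __name__ == "__main__":
    tracker = UsageTracker(PRICE_PER_1K)
    tracker.record("small-fast-model", tokens=2000, latency_ms=120.0)
    tracker.record("enterprise-tier-model", tokens=500, latency_ms=900.0)
    print(tracker.total_cost(), tracker.mean_latency_ms("small-fast-model"))
```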

Pros

- Reduces vendor lock-in and increases flexibility in multi-model projects.

- Simplifies cost management with centralized usage analytics.

Cons

- Relies on third-party uptime and routing performance.

- Advanced routing features may require paid tiers or enterprise plans.

Startups and enterprise R&D organizations depend on OpenRouter to prototype, benchmark, and deploy multi-model AI solutions that are not locked into one LLM vendor. AI research labs use it to A/B test outputs from Anthropic’s Claude, OpenAI’s GPT, and Mistral’s Mixtral for quality and efficiency. At the same time, enterprise developers can use OpenRouter to route tasks with sensitive content to secure endpoints while keeping costs down on exploratory and low-priority tasks.

AutoGen (Microsoft)

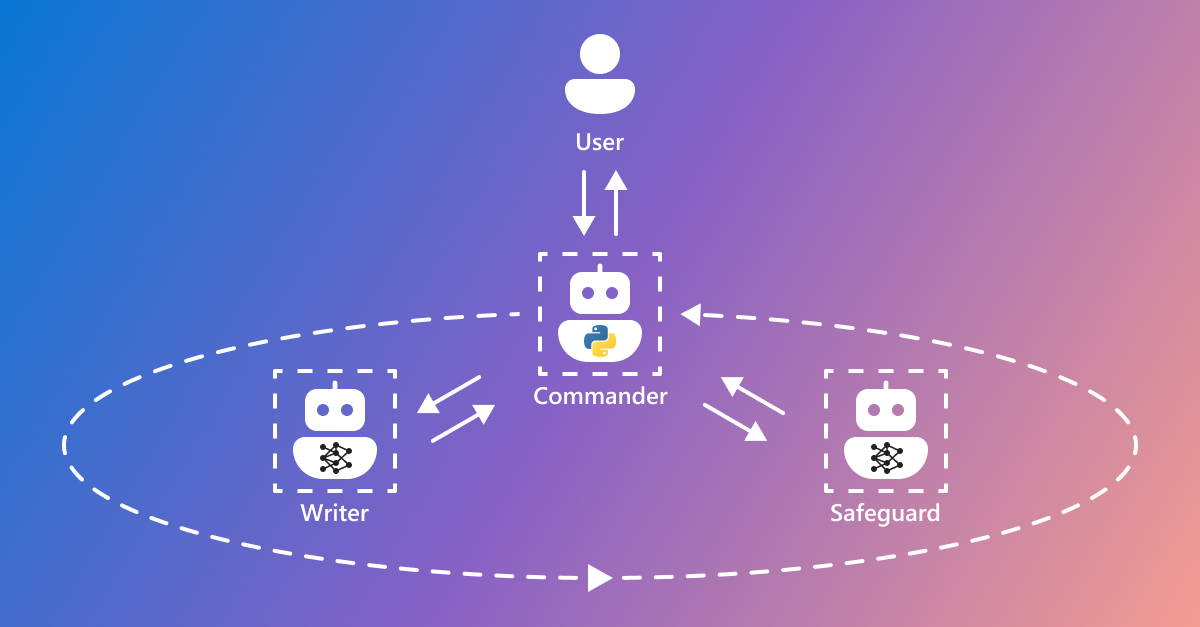

AutoGen enables developers to build collaborative AI systems made up of multiple LLM-powered agents that cooperate to achieve complex goals. In contrast to single-assistant setups, AutoGen lets developers compose workflows of specialized agents (e.g., code reviewer, bug fixer, test writer, deployment orchestrator) that communicate with each other in a structured, automated loop.

Paired with Azure AI Studio and an enterprise-grade secure cloud backend, AutoGen is especially appealing to enterprises looking for scalable, secure, and orchestrated AI operations. By combining agent autonomy with human-in-the-loop controls, it offers a safe experimentation platform for enterprise AI while maintaining compliance and reliability.
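The structured agent loop with a human approval gate can be sketched as follows. This is plain Python rather than AutoGen's API: the `writer_agent` and `reviewer_agent` functions are deterministic stubs standing in for LLM-backed agents, and `run_conversation`, `max_rounds`, and `approve` are hypothetical names chosen for the example.

```python
from typing import Callable, List, Tuple

Message = Tuple[str, str]  # (sender, content)


def writer_agent(history: List[Message]) -> str:
    """Stub code writer: produces a buggy draft, then a fix once a review exists."""
    reviewed = any(sender == "reviewer" for sender, _ in history)
    if reviewed:
        return "def add(a, b): return a + b"
    return "def add(a, b): return a - b"


def reviewer_agent(history: List[Message]) -> str:
    """Stub reviewer: approves the latest message only if it adds the operands."""
    latest_code = history[-1][1]
    if "a + b" in latest_code:
        return "APPROVED"
    return "Bug: add() subtracts instead of adding."


def run_conversation(
    max_rounds: int = 5,
    approve: Callable[[str], bool] = lambda code: True,
) -> List[Message]:
    """Alternate writer and reviewer turns; stop on approval or at the round limit."""
    history: List[Message] = []
    for _ in range(max_rounds):  # hard cap guards against endless agent loops
        history.append(("writer", writer_agent(history)))
        history.append(("reviewer", reviewer_agent(history)))
        if history[-1][1] == "APPROVED":
            code = history[-2][1]
            # Human-in-the-loop gate: a person (or policy) has the final say.
            status = "accepted" if approve(code) else "rejected by human"
            history.append(("human", status))
            return history
    history.append(("system", "stopped: round limit reached"))
    return history


if __name__ == "__main__":
    for sender, content in run_conversation():
        print(f"{sender}: {content}")
```

The explicit round limit and the approval callback are the two controls that keep an autonomous loop bounded and auditable.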

Key Features

- Framework for creating multi-agent LLM workflows with message passing.

- Native Azure integration for enterprise security and scaling.

- Human-in-the-loop options for oversight and approval.

- Built-in support for code execution, tool use, and agent orchestration.

Pros

- Reduces developer workload by automating multi-step, complex tasks.

- Enterprise-ready with robust security and Azure cloud support.

Cons

- Requires thoughtful design to prevent agent loops or redundant actions.

- Currently optimized for the Microsoft ecosystem; less flexible for non‑Azure stacks.

AutoGen is most often applied to software engineering and enterprise automation. Teams use it to automate end-to-end workflows, for example, generating new feature code, performing peer-like code reviews, and deploying test environments without manual intervention.

Claude Code

Claude Code, Anthropic’s developer-centric tool built on the Claude 3.x family, has secured a premium position in 2025 AI development flows by focusing on context depth and safety. Its main strength is deep context understanding. Developers can supply an entire repository, long technical documents, or complex architecture diagrams, and Claude will reason consistently across those inputs without losing track of which dependency is which. Its reasoning-first approach to code review reduces the risk of insecure code recommendations, a top concern for enterprise adoption, and because it builds on Constitutional AI principles, it is one of the few AI coding tools that takes deliberate steps to prevent risky suggestions.

Key Features

- Handles massive context windows, supporting multi‑file or full‑repo analysis.

- Reasoning‑first approach with explainable code suggestions.

- Built‑in safety and compliance filters based on Constitutional AI.

- Native integration with Anthropic’s enterprise cloud and secure endpoints.

Pros

- Exceptional for large‑scale refactoring and compliance‑sensitive development.

- Lower risk of insecure code generation compared to general AI assistants.

Cons

- Slightly slower than lightweight AI code assistants for quick completions.

- Currently optimized for enterprise use, with fewer hobbyist‑friendly features.

Claude Code is widely adopted in finance, healthcare, and regulated SaaS environments where compliance and reasoning accuracy are paramount. Enterprise developers rely on it for full-repo audits, architecture refactoring, and generating safe boilerplate for internal APIs. Claude Code has been incorporated into code review pipelines at global consulting firms to identify security concerns in new code and provide natural language explanations to human reviewers. For companies that prioritize safety and thorough reasoning over speed, Claude Code is a strong choice.

v0

v0 by Vercel is the front-end developer’s AI co-pilot in 2025, closing the loop between design and production. In contrast to classic AI code helpers, which mostly target backend code or line-by-line completion, v0 is built for UI and application prototyping. Developers can provide a wireframe, Figma design, or description in plain English, and v0 will build fully responsive, production-ready components with React, Tailwind, and Vercel’s serverless stack. Its superpower is speed and iteration. A front-end engineer can move from concept to live prototype faster than before, thanks to AI-generated components that are editable, scalable, and in line with contemporary design systems.

Key Features

- Converts designs, sketches, or prompts into production‑ready components.

- Generates code optimized for React, Tailwind, and Vercel hosting.

- Live preview and one‑click deployment to Vercel’s serverless platform.

- Supports iterative editing, allowing developers to refine AI‑generated UIs quickly.

Pros

- Massive time‑saver for front‑end MVPs and rapid iterations.

- Tight integration with modern front‑end stacks and deployment pipelines.

Cons

- Primarily focused on front-end/UI workflows, less useful for backend or logic-heavy apps.

- Generated components often require developer polishing to meet production standards.

Bolt.new

Bolt.new focuses on end‑to‑end app generation, from database setup and backend logic to front‑end scaffolding and deployment. Developers provide a short project description or set of functional requirements, and Bolt.new outputs a fully runnable application with clean, editable code.

Its core strength is speed and end‑to‑end coverage. A developer can generate a CRUD SaaS app, an e‑commerce prototype, or an internal dashboard and immediately deploy it to the cloud. Bolt.new also integrates AI‑driven iteration: after initial generation, developers can request changes conversationally, such as adding authentication, tweaking UI elements, or connecting to an external API.

Key Features

- Instant full‑stack app generation from a natural language prompt.

- Pre‑configured databases, backend logic, and front‑end scaffolding.

- Integrated AI iteration for feature changes and refinements.

- Cloud deployment support for one‑click hosting of generated apps.

Pros

- Massively accelerates MVP and proof‑of‑concept development.

- Produces editable, human‑readable code for future customization.

Cons

- Less suited for complex, highly customized production apps.

- Generated apps may require manual optimization for performance and security.

ChatGPT / Gemini

In 2025, OpenAI’s ChatGPT and Google DeepMind’s Gemini have matured from general-purpose conversational AIs into full-fledged developer utilities that plug into every stage of development, from code completion to full-stack app prototyping. No longer just for chat, these models understand code, images, diagrams, and even live system logs, which cuts the time it takes to solve difficult problems.

ChatGPT Enterprise integrates directly into IDEs, cloud platforms, and internal knowledge bases, enabling teams to generate, debug, and document code with contextual awareness. Its Code Interpreter / Advanced Data Analysis mode now supports real‑time log parsing and on‑the‑fly script execution, making it a versatile partner for DevOps and data engineering workflows.

Gemini, on the other hand, relies on multi-modal reasoning and deep integration with Google Cloud. It shines at tasks such as explaining architectural diagrams, synthesizing deployment scripts, and pairing LLM reasoning with search-enhanced data. Its Google AI Studio interface enables developers to prototype entire applications while connecting to Google’s APIs and enterprise services out of the box.

GitHub Copilot / Cursor / Codeium (AI Code Assistants)

GitHub Copilot continues to lead the way in AI-powered coding, with powerful integration into VS Code and JetBrains IDEs. The Copilot X upgrade introduced contextual chat, multi‑file comprehension, and inline documentation generation, making the platform a must‑have for solo developers and enterprise teams alike.

Cursor, a 2025 darling of startups and open-source contributors, brings a more agentic perspective. It lets developers query their codebase as if it were a trusted colleague, edit across multiple files, and run code analysis in the editor, turning the editor into an embedded coding co-pilot and debugger.

Codeium, meanwhile, sets itself apart with privacy‑first deployments and team‑focused features for companies that want on‑premise AI code assistance without shipping code to third‑party servers.

Beyond this list, dozens of new tools are stretching the limits of what AI-assisted development can achieve. Vector database options continue to evolve, with Weaviate and Milvus, alongside frameworks such as LlamaIndex and Haystack, remaining popular choices for custom RAG pipelines, and Replit Ghostwriter continues to open up AI coding to small, loosely organized teams and hobbyists. Even specialized tools like Cerebras SDK and Baseten suggest a future where AI-augmented infrastructure and model-as-code practices are commonplace.

What this means for developers and businesses is clear: the era of AI‑native software development is upon us. The teams who learn to integrate human creativity with these AI‑powered tools will move faster, build smarter, and lead tomorrow’s generation of intelligent applications.
