AI is now a standard part of the enterprise tech stack: no longer a distant R&D project, but a practical system component underpinning everything from customer service to forecasting models. Yet many product and technical leaders remain in the dark about how these systems are actually built.

This post explains what AI development actually involves, from machine learning and deep learning to today’s LLMs, RAG pipelines, and vector databases. Whether you’re weighing build vs. buy, testing new AI tech, or scoping your first use case, it gives you the context to do it right.

What Is AI Development?

AI development is the process of designing, training, and deploying software systems that exhibit behaviors traditionally associated with humans: perception, reasoning, prediction, decision-making, and language understanding. “Artificial intelligence” is a catch-all term; more accurately, it describes a layered stack of technologies, like a pyramid, with each layer having its own function and implementation path.

Source: https://www.devprojournal.com/software-development-trends/devops/what-are-the-most-commonly-used-software-development-tools/

The Three-Layered Stack: AI, ML, and DL

- Artificial Intelligence (AI) is the supercategory. It includes any algorithm or system that imitates cognitive functions, whether it’s a rule-based expert system or a neural network that produces human-like text. AI is the umbrella under which everything else falls.

- Machine Learning (ML) is a subdomain of AI. Instead of following hand-coded rules, ML systems learn patterns from data: they improve by adjusting their parameters to reduce a statistical error measured on training examples. ML is the workhorse behind many of today’s AI systems, especially in classification, prediction, and recommender systems.

- Deep Learning (DL) is a more specific subdomain of ML. It relies on deep neural networks, multi-layered networks that capture complex patterns in high-dimensional data. DL has driven significant progress in image recognition, natural language processing, and generative modeling.

Think of AI as the goal, ML as the method, and DL as the scaling mechanism. Traditional ML methods (e.g., decision trees, SVMs) require feature engineering and perform well on structured data. Deep learning, by contrast, excels at modeling unstructured data (text, images, audio) at scale, reducing the need for manual feature extraction.
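To make the idea of “learning patterns from data” concrete, here is a minimal sketch: a one-feature linear model whose two parameters are fitted by gradient descent on mean squared error. The toy data and the hyperparameters (`lr`, `epochs`) are illustrative assumptions; real projects would use a library such as scikit-learn or PyTorch.

```python
def fit_linear(xs, ys, lr=0.05, epochs=2000):
    """Fit y ≈ w*x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Move each parameter a small step against its gradient
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Toy data generated from y = 2x + 1; the model is never told this rule
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]
w, b = fit_linear(xs, ys)
print(round(w, 2), round(b, 2))
```

No rule is programmed in; the parameters converge toward the relationship hidden in the examples, which is the core difference from a rule-based system.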

Applied AI vs. General AI

Another crucial distinction: applied AI refers to the domain-specific, task-driven systems going into production today. Such systems are trained for specific tasks in narrowly defined domains (detecting fraud, summarizing contracts, forecasting demand), and they deliver measurable accuracy and business impact.

Artificial General Intelligence (AGI), on the other hand, remains hypothetical. The term refers to systems that exhibit human-level reasoning and learning abilities across a broad range of domains. AGI is outside the scope of enterprise strategy, not because it isn’t interesting, but because no one can build it yet, and it has no practical commercial use today.

Source: https://www.vecteezy.com/vector-art/22400143-artificial-general-intelligence-linear-logo-minimalist-style-agi-icon-depicts-physics-and-technology-showcasing-ai-brain-powered-machine-learning-vector-eps-illustration

For business leaders, the priority should be applied, production-ready AI. These systems are designed to integrate with your existing solutions, operate under constraints such as latency and privacy, and deliver ROI through automation, augmentation, or optimization.

Key Technologies & Tools

Today’s AI stack is assembled from modular building blocks, each responsible for one layer of functionality (representation, retrieval, generation, or orchestration), which makes it practical to deploy into existing enterprise systems. Here is a summary of the core technologies every business leader should know.

Large Language Models (LLMs)

Large language models (LLMs) are deep learning models trained on large-scale text corpora to generate, classify, and manipulate language. Architecturally, they are based on transformer networks, an innovation that enables models to handle long-range dependencies in text and to parallelize training at scale.
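The transformer’s key mechanism for long-range dependencies is attention: each token scores its relevance to every other token, however far apart, and takes a weighted mix of their values. Below is a minimal single-head sketch of scaled dot-product attention, softmax(QKᵀ/√d)V, in plain Python. The hand-written query, key, and value vectors are illustrative assumptions; production models use learned projections, many heads, and GPU tensor libraries.

```python
import math


def softmax(scores):
    # Subtract the max for numerical stability before exponentiating
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs


# Three toy tokens with 2-dimensional keys and values (hypothetical numbers)
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
queries = [[4.0, 0.0]]  # a query aligned with the first key dimension
out = attention(queries, keys, values)
print(out)
```

Every query is compared with every key in one pass, so distant tokens are as reachable as adjacent ones, and all positions can be computed in parallel.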

Models like GPT-5 (OpenAI), Claude (Anthropic), LLaMA (Meta), and Mistral are pretrained with self-supervised objectives, typically next-token prediction, at a scale of billions of parameters and tokens. Training runs on distributed compute infrastructure using techniques like mixed-precision training and gradient checkpointing, and models are then refined with methods such as reinforcement learning from human feedback (RLHF).
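Next-token prediction means the model is trained to assign high probability to each actual next token given the preceding context, and the training loss is the average negative log-likelihood (cross-entropy) of those tokens. The sketch below illustrates the objective with a deliberately tiny stand-in, a bigram count model over a toy corpus rather than a neural network; the corpus and function names are hypothetical.

```python
import math
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count how often each token follows each previous token."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def next_token_probs(counts, prev):
    """Turn raw follow-counts into a probability distribution over next tokens."""
    total = sum(counts[prev].values())
    return {tok: c / total for tok, c in counts[prev].items()}


def cross_entropy(counts, tokens):
    """Average negative log-likelihood of each actual next token."""
    nll = [-math.log(next_token_probs(counts, p)[n]) for p, n in zip(tokens, tokens[1:])]
    return sum(nll) / len(nll)


corpus = "the cat sat on the mat the cat ran".split()
model = train_bigram(corpus)
print(next_token_probs(model, "the"))
print(round(cross_entropy(model, corpus), 3))
```

This toy evaluates only on its own training corpus; an unseen word pair would raise an error, which is why real language models rely on learned representations (and count models on smoothing) to generalize.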

Retrieval-Augmented Generation (RAG)

RAG is a hybrid architecture that combines an LLM’s generative ability with an external retrieval system (typically a vector database). Before generating a response, the system retrieves relevant information from a knowledge base (e.g., internal documents, policy PDFs, structured data), inserts it into the prompt as context, and only then has the model produce an output.

Why it matters:

LLMs are limited by their training cutoff and don’t “know” proprietary data. RAG allows enterprises to build AI systems that are both current and domain-aware without retraining the base model.

Notable tools include LangChain, LlamaIndex, Weaviate, Pinecone, and FAISS.
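The retrieve-then-generate flow can be sketched in a few lines. This is a minimal illustration, not the LangChain or LlamaIndex API: retrieval uses simple word overlap as a stand-in for embedding similarity, the knowledge-base snippets are made up, and `call_llm` is a hypothetical placeholder for whichever model client you use.

```python
import re


def tokenize(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, k=2):
    """Rank documents by word overlap with the query (stand-in for vector search)."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query, documents, k=2):
    """Insert the retrieved passages into the prompt before the model answers."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    )


def call_llm(prompt):
    # Hypothetical placeholder: replace with a real model client
    raise NotImplementedError


knowledge_base = [
    "Refunds are issued within 14 days of a cancellation request.",
    "Our office is closed on public holidays.",
    "To cancel a subscription, open Billing and choose Cancel plan.",
]
prompt = build_prompt("How do I cancel my subscription?", knowledge_base)
print(prompt)
```

In a real pipeline, `retrieve` would query a vector database and the finished prompt would be passed to `call_llm`; the base model itself is never retrained.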

Vector Databases

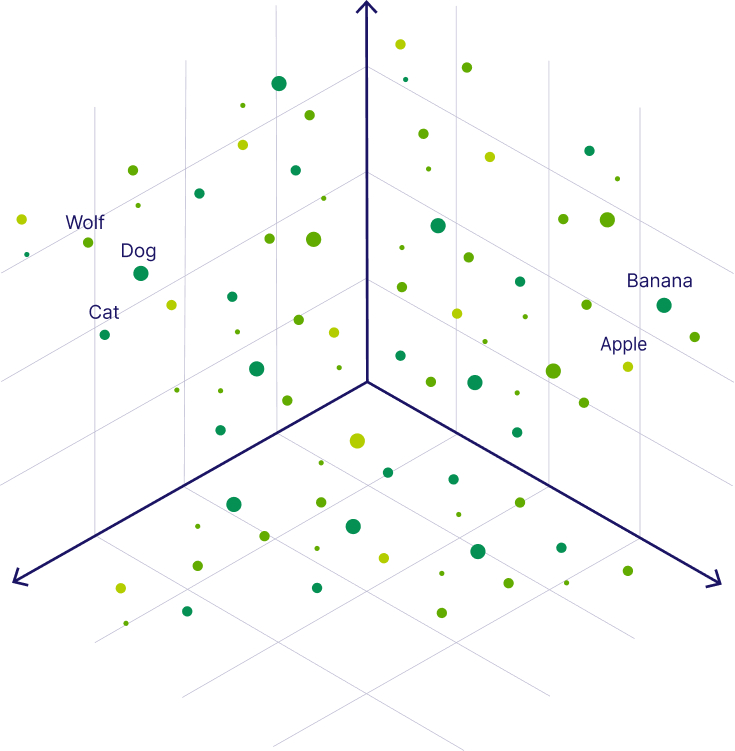

Vector databases are specialized systems for storing and retrieving high-dimensional embeddings: numeric representations of unstructured data like text, images, or audio. Rather than matching exact keywords, they let AI systems find information based on meaning. For instance, if a user asks, “How do I cancel my plan?” the system can retrieve past support tickets that mention “ending a subscription” or “closing an account,” even though those exact words weren’t used. Content about cancelling a subscription lands close together in the embedding space regardless of its exact wording, so it can be retrieved based on intent.

Source: https://weaviate.io/blog/what-is-a-vector-database
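Semantic retrieval comes down to comparing embedding vectors, most commonly with cosine similarity. The sketch below uses tiny hand-written 3-dimensional vectors as hypothetical stand-ins for real embeddings (which come from an embedding model and typically have hundreds or thousands of dimensions) to show how tickets about ending a subscription can outrank an unrelated ticket for “How do I cancel my plan?” without sharing its keywords.

```python
import math


def cosine(a, b):
    """Cosine similarity: dot product divided by the product of vector lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


# Hypothetical toy embeddings; dimensions loosely mean (billing, cancellation, shipping)
tickets = {
    "Help with ending a subscription": [0.6, 0.8, 0.0],
    "Closing an account": [0.4, 0.9, 0.1],
    "Where is my package?": [0.1, 0.0, 0.99],
}
query_vec = [0.5, 0.85, 0.05]  # stands in for "How do I cancel my plan?"

# Rank tickets from most to least similar to the query
ranked = sorted(tickets, key=lambda t: cosine(query_vec, tickets[t]), reverse=True)
print(ranked)
```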

The core capability of vector databases is semantic search at scale, even in low-latency use cases. They achieve this with Approximate Nearest Neighbor (ANN) algorithms such as HNSW or IVF, which speed up similarity search over very large vector sets by avoiding an exhaustive comparison against every stored vector. This makes vector DBs a critical backbone of RAG pipelines.
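To see how an ANN index avoids a full scan, here is a deliberately simplified sketch of the idea behind IVF (inverted file) indexing: each vector is bucketed by its nearest centroid, and a query searches only the `nprobe` closest buckets. The fixed centroids and 2-D points are illustrative assumptions; real IVF implementations (e.g., in FAISS) learn centroids with k-means over high-dimensional vectors, and HNSW uses a navigable graph instead.

```python
import math


class ToyIVFIndex:
    def __init__(self, centroids):
        self.centroids = centroids
        self.lists = {i: [] for i in range(len(centroids))}  # one inverted list per centroid

    def _nearest_centroids(self, vec, n):
        order = sorted(range(len(self.centroids)),
                       key=lambda i: math.dist(vec, self.centroids[i]))
        return order[:n]

    def add(self, item_id, vec):
        # Each vector is stored in the bucket of its closest centroid
        bucket = self._nearest_centroids(vec, 1)[0]
        self.lists[bucket].append((item_id, vec))

    def search(self, query, k=1, nprobe=1):
        # Scan only the nprobe closest buckets instead of the whole collection
        candidates = []
        for bucket in self._nearest_centroids(query, nprobe):
            candidates.extend(self.lists[bucket])
        candidates.sort(key=lambda item: math.dist(query, item[1]))
        return [item_id for item_id, _ in candidates[:k]], len(candidates)


index = ToyIVFIndex(centroids=[[0.0, 0.0], [10.0, 10.0]])
points = {"a": [0.5, 0.2], "b": [1.0, 1.5], "c": [9.5, 10.2], "d": [11.0, 9.0]}
for pid, vec in points.items():
    index.add(pid, vec)

ids, scanned = index.search([9.0, 9.0], k=1, nprobe=1)
print(ids, "found after scanning", scanned, "of", len(points), "vectors")
```

The “approximate” part is the trade-off: with a small `nprobe`, a true nearest neighbor sitting in an unprobed bucket can be missed, and raising `nprobe` buys recall at the cost of speed.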

For enterprises, vector databases unlock intelligent access to internal knowledge silos, be they support tickets, legal documents, product specs, or conversation logs. Leading options such as Pinecone, Weaviate, and Qdrant, along with the FAISS similarity-search library, offer APIs that integrate directly into AI stacks, often alongside orchestration frameworks like LangChain.

Prompt Engineering

Prompt engineering is the practice of crafting and structuring inputs to LLMs to elicit the desired output behavior. While LLMs are powerful, their responses are sensitive to how instructions are framed, what context is given, and in what order.

Example:

A generic prompt such as “Summarize this document” may yield vague results. A refined prompt such as “Summarize this document for a financial analyst looking for quarterly risk factors” produces domain-specific value.

Advanced Prompt Techniques: Chain-of-thought prompting, zero-shot reasoning, output templating, and system instructions.
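Several of these techniques (system instructions, output templating, and a step-by-step reasoning cue) can be combined in a reusable template. The sketch below builds a list of role/content messages, a format many chat-style LLM APIs use; the exact field names your provider expects, the hypothetical `build_messages` helper, and the instruction wording are assumptions to adapt.

```python
def build_messages(document, audience, focus):
    """Assemble a chat-style prompt: system instructions plus a templated user turn."""
    system = (
        "You are a careful analyst. Use only the supplied document. "
        "Reason step by step about which sections are relevant, "
        "then answer in the requested format."
    )
    user = (
        f"Summarize the document below for a {audience} looking for {focus}.\n"
        "Format:\n"
        "Summary: <two sentences>\n"
        "Key points:\n- <point>\n- <point>\n\n"
        f"Document:\n{document}"
    )
    return [{"role": "system", "content": system}, {"role": "user", "content": user}]


messages = build_messages(
    document="Q3 revenue rose 4%, while dependence on a single supplier increased.",
    audience="financial analyst",
    focus="quarterly risk factors",
)
for m in messages:
    print(m["role"].upper())
    print(m["content"])
    print()
```

Keeping the template in code makes prompts versionable and testable, so changes in framing or ordering can be evaluated like any other change.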

LangChain and Orchestration Frameworks

While LLMs handle text generation, LangChain, Haystack, and similar orchestration frameworks act as the “middleware” that connects models, tools, APIs, databases, and user-facing applications.

These frameworks enable:

- Agent-style workflows (e.g., multi-step reasoning).

- Tool calling (e.g., search + calculator + API fetch).

- Data chaining (e.g., RAG pipelines, memory buffers).

- Context injection (e.g., dynamic prompt construction).

If LLMs are engines, orchestration frameworks are the runtime layer that wires together input sensors, control logic, and output interfaces.
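The tool-calling pattern these frameworks implement reduces to a small loop: the model proposes an action, the runtime executes the matching tool, and the result is fed back until the model produces a final answer. The sketch below is framework-agnostic and does not use LangChain’s or Haystack’s actual APIs; `fake_model` is a hypothetical, scripted stand-in for an LLM that returns structured actions, and both tools are toys.

```python
def calculator(expression):
    # Toy tool: supports only "a + b", avoiding eval() on model output
    a, b = expression.split("+")
    return str(float(a) + float(b))


def lookup_price(product):
    prices = {"basic": "10", "pro": "25"}  # toy data standing in for an API fetch
    return prices.get(product.strip().lower(), "unknown")


TOOLS = {"calculator": calculator, "lookup_price": lookup_price}


def fake_model(question, history):
    """Hypothetical stand-in for an LLM that emits structured tool calls."""
    if not history:
        return {"tool": "lookup_price", "input": "basic"}
    if len(history) == 1:
        return {"tool": "lookup_price", "input": "pro"}
    if len(history) == 2:
        return {"tool": "calculator", "input": f"{history[0][1]} + {history[1][1]}"}
    return {"final": f"Both plans together cost {history[2][1]}."}


def run_agent(question, model, tools, max_steps=5):
    history = []  # (tool call, observation) pairs fed back to the model
    for _ in range(max_steps):
        action = model(question, history)
        if "final" in action:
            return action["final"], history
        observation = tools[action["tool"]](action["input"])
        history.append((action, observation))
    raise RuntimeError("Agent did not finish within max_steps")


answer, trace = run_agent("What do Basic and Pro cost together?", fake_model, TOOLS)
print(answer)
```

The `max_steps` guard matters in practice: a real model can loop or call tools indefinitely, so the runtime, not the model, should enforce limits.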

Enterprise Use Cases

Inventory Forecasting: Dynamic Demand Modeling at Scale

What the AI does:

Machine learning models, usually time-series models or sequence predictors (some built on the transformer architecture), learn from historical demand, seasonality, supply chain behavior, and external signals (such as weather or market trends) to estimate inventory demand at the SKU, location, or warehouse level.

What problem it solves:

Understocking leads to lost revenue. Overstocking increases holding costs and waste. AI-powered systems eliminate much of this mismatch: McKinsey analysts report that AI-driven forecasting cut forecasting errors by 20-50%, reduced lost sales and product unavailability by up to 65%, lowered warehousing costs by 5-10%, and slashed administration costs by a quarter to over a third.
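Forecast accuracy is commonly tracked with error metrics such as MAPE (mean absolute percentage error). As a minimal sketch, the code below produces one-step-ahead forecasts with simple exponential smoothing (SES) and compares their MAPE against a naive “same as last week” baseline. The weekly demand figures and the smoothing factor `alpha` are made-up assumptions; production systems would use richer models and features like those listed under “Tools typically used.”

```python
def naive_forecast(history):
    """One-step-ahead forecasts that simply repeat the previous period."""
    return history[:-1]


def ses_forecast(history, alpha=0.5):
    """One-step-ahead simple exponential smoothing forecasts for periods 1..n-1."""
    level = history[0]
    forecasts = []
    for actual in history[1:]:
        forecasts.append(level)  # forecast is made before `actual` is observed
        level = alpha * actual + (1 - alpha) * level  # blend new data into the level
    return forecasts


def mape(actuals, forecasts):
    """Mean absolute percentage error in percent (assumes no zero actuals)."""
    return 100 * sum(abs(a - f) / abs(a) for a, f in zip(actuals, forecasts)) / len(actuals)


demand = [100, 120, 90, 110, 105, 115, 95, 108]  # hypothetical weekly units for one SKU
actuals = demand[1:]
print("naive MAPE:", round(mape(actuals, naive_forecast(demand)), 1))
print("SES MAPE:", round(mape(actuals, ses_forecast(demand)), 1))
```

On this noisy toy series, smoothing yields a lower MAPE than the naive baseline because it damps week-to-week swings; data with strong trend or seasonality would need trend and seasonal terms or one of the dedicated forecasting models.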

Tools typically used:

- Models: Prophet (Meta), XGBoost, DeepAR (AWS), Temporal Fusion Transformers;

- Frameworks: TensorFlow, PyTorch;

- Platforms: Amazon Forecast, Databricks MLflow, custom deployments via AWS SageMaker.

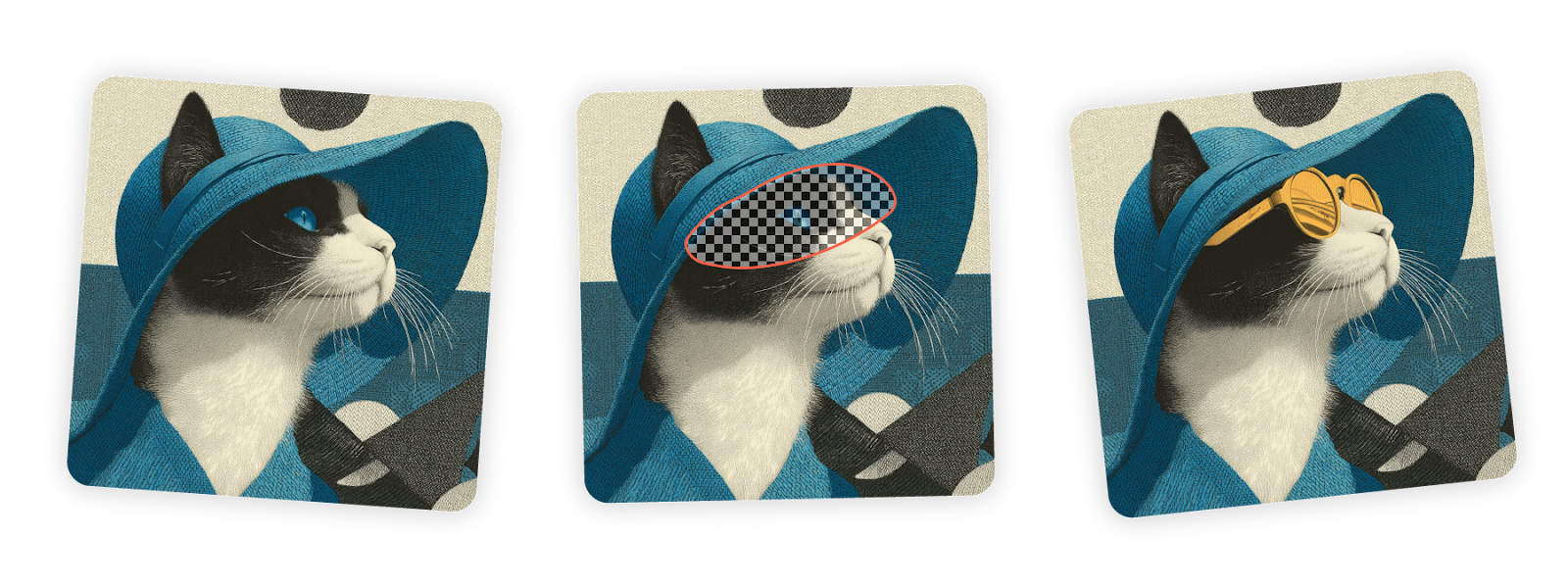

Visual Asset Generation: Accelerated Design Prototyping

What the AI does:

Generative models, such as diffusion models or GANs, produce 2D and 3D visual assets, including product mockups, textures, concept art, and scene compositions. With conditional generation, outputs can be guided by prompts, sketches, or templates.

Source: https://docs.midjourney.com/hc/en-us/articles/33329329805581-Modifying-Your-Creations

What problem it solves:

Manual asset creation is slow and costly, especially in product design and game development pipelines. According to Gitnux, AI reduces iteration time: 68% of design agencies report a project turnaround reduction of at least 30%, and 45% note a decrease in manual workload.

Tools typically used:

- Models: Stable Diffusion, DALL·E, Midjourney (via API integrations);

- 3D Tools: NVIDIA GET3D, Kaedim AI, Meshcapade;

- Pipeline integrations: Blender + ControlNet, Unity or Unreal Engine with AI-assisted import.

AI Game Assistants: Dynamic NPC Behavior

What the AI does:

Large language models and behavior trees drive more dynamic non-player character (NPC) dialogue, reactions, and decision-making. Some approaches add RAG to retrieve story context in real time, or continual learning to adapt NPC behavior over time.
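Behavior trees give designers deterministic control over when an NPC acts, while an LLM can supply what the NPC says. Below is a minimal sketch of the two classic composite nodes, a Selector (try children until one succeeds) and a Sequence (run children until one fails), with a dialogue leaf that calls `generate_line`, a hypothetical stand-in for an LLM call. The node classes and the world-state dictionary are illustrative assumptions, not the API of Unity ML-Agents, Inworld AI, or Convai.

```python
SUCCESS, FAILURE = "success", "failure"


class Sequence:
    """Runs children in order; fails as soon as one child fails."""
    def __init__(self, *children):
        self.children = children

    def tick(self, state):
        for child in self.children:
            if child.tick(state) == FAILURE:
                return FAILURE
        return SUCCESS


class Selector:
    """Tries children in order; succeeds as soon as one child succeeds."""
    def __init__(self, *children):
        self.children = children

    def tick(self, state):
        for child in self.children:
            if child.tick(state) == SUCCESS:
                return SUCCESS
        return FAILURE


class Condition:
    def __init__(self, key):
        self.key = key

    def tick(self, state):
        return SUCCESS if state.get(self.key) else FAILURE


class Action:
    def __init__(self, fn):
        self.fn = fn

    def tick(self, state):
        self.fn(state)
        return SUCCESS


def generate_line(state):
    # Hypothetical stand-in for an LLM call that would receive lore and player context
    state["dialogue"] = f"Welcome back, {state['player_name']}. The bridge is still out."


npc = Selector(
    Sequence(Condition("player_is_hostile"), Action(lambda s: s.update(dialogue="Guards!"))),
    Sequence(Condition("player_nearby"), Action(generate_line)),
)

state = {"player_nearby": True, "player_is_hostile": False, "player_name": "Ari"}
npc.tick(state)
print(state["dialogue"])
```

Keeping the LLM at the leaves means safety-critical branches (like the hostile-player response) stay scripted, while open-ended dialogue gets the flexibility of generation.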

What problem it solves:

Static NPC scripting limits replayability and immersion. AI-driven NPCs can respond contextually to player actions, enabling more lifelike social dynamics, procedural storytelling, and emergent gameplay without exponential authoring overhead.

Tools typically used:

- LLMs: GPT-4, Claude, LLaMA (fine-tuned on in-game lore);

- Frameworks: LangChain for memory/context management, Unity ML-Agents;

- Tools: Inworld AI, Convai, Replica Studios for voice + dialogue logic.

Document Summarization: Legal and Financial Data Abstraction

What the AI does:

Transformer models perform extractive or abstractive summarization of dense documents—contracts, financial reports, policy manuals—distilling pages of content into key clauses, risk statements, or executive summaries.
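Extractive summarization selects existing sentences rather than writing new ones. The sketch below swaps in a classic frequency-scoring heuristic instead of a transformer, purely to show the extractive idea: sentences dense in the document’s most frequent content words score highest, and the top ones are returned in their original order. The stopword list and sample clauses are illustrative assumptions.

```python
import re
from collections import Counter

STOPWORDS = {"the", "a", "an", "of", "to", "and", "or", "in", "is", "are", "at",
             "by", "for", "on", "this", "be", "with", "any", "shall"}


def split_sentences(text):
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def content_words(sentence):
    return [w for w in re.findall(r"[a-z]+", sentence.lower()) if w not in STOPWORDS]


def extractive_summary(text, n=2):
    sentences = split_sentences(text)
    freq = Counter(w for s in sentences for w in content_words(s))

    def score(s):
        # Average document frequency of the sentence's content words
        ws = content_words(s)
        return sum(freq[w] for w in ws) / len(ws) if ws else 0.0

    top = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)[:n]
    return " ".join(sentences[i] for i in sorted(top))  # keep original sentence order


contract = (
    "This agreement starts on 1 March. "
    "The supplier shall pay a penalty for late delivery. "
    "Late delivery penalties are capped at 10% of the order value. "
    "Notices must be sent in writing."
)
print(extractive_summary(contract, n=2))
```

Abstractive transformer models go further by rewriting content in new words, which reads better but needs checks for statements the source does not support.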

What problem it solves:

Professionals spend hours reviewing complex, repetitive documents. AI summarization speeds up due diligence, reporting, and compliance workflows while reducing human error in repetitive parsing tasks.

Tools typically used:

- Models: OpenAI GPT-4, Google T5, Longformer, Claude 2 (for longer context windows);

- Pipelines: RAG with vector DBs (e.g., Pinecone), summary templating via prompt engineering;

- Platforms: Azure AI Document Intelligence, private GPT deployments.

Predictive Maintenance: Preventing Downtime with Sensor Intelligence

What the AI does:

ML models trained on sensor data (vibration, temperature, electrical signals) detect patterns leading up to equipment failure. Models predict the Remaining Useful Life (RUL) of machinery and recommend proactive interventions.
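A simple way to turn a degradation signal into a Remaining Useful Life estimate is to fit a trend to a health indicator and extrapolate it to a failure threshold. The sketch below does this with an ordinary least-squares line. The vibration readings, the 7.0 threshold, and the assumption of linear wear are all hypothetical; real systems typically use learned models like those listed under “Tools typically used,” fed by many sensor channels.

```python
def fit_line(ts, ys):
    """Ordinary least-squares fit of y = slope * t + intercept."""
    n = len(ts)
    t_mean, y_mean = sum(ts) / n, sum(ys) / n
    cov = sum((t - t_mean) * (y - y_mean) for t, y in zip(ts, ys))
    var = sum((t - t_mean) ** 2 for t in ts)
    slope = cov / var
    return slope, y_mean - slope * t_mean


def estimate_rul(hours, vibration, threshold):
    """Hours until the fitted trend crosses the failure threshold (None if not degrading)."""
    slope, intercept = fit_line(hours, vibration)
    if slope <= 0:
        return None  # no upward degradation trend to extrapolate
    t_fail = (threshold - intercept) / slope
    return max(0.0, t_fail - hours[-1])


# Hypothetical hourly vibration RMS readings from one bearing
hours = [0, 1, 2, 3, 4, 5]
vibration = [2.0, 2.5, 3.0, 3.5, 4.0, 4.5]
print(estimate_rul(hours, vibration, threshold=7.0))
```

The estimate is only as good as the degradation assumption; wear often accelerates near failure, which is one reason sequence models such as LSTMs are used instead of a straight line.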

What problem it solves:

Unexpected equipment failures cause costly downtime. Traditional maintenance schedules are either too rigid or too late. Predictive systems enable condition-based maintenance, improving uptime and resource planning.

Tools typically used:

- Models: LSTMs, Random Forests, CNNs for signal data;

- Frameworks: Edge AI with TensorFlow Lite, PyTorch Mobile, AWS Greengrass;

- Platforms: Siemens MindSphere, Azure IoT Hub, IBM Maximo.

Common Pitfalls and How to Avoid Them

Even with powerful tools and a well-defined use case, enterprise AI development is hardly frictionless. Below are the most common technical and operational traps, along with practical ways to head them off before they undermine success, confidence, or ROI.

Data Quality Issues

AI models are only as good as the data they are trained on or grounded in. Imbalanced distributions, noise, missing values, and inconsistent formats all make model performance unreliable. In production, data drift (a shift in the data distribution over time, e.g., from seasonality or changes in user behavior) can also cause silent failures. According to Gartner, poor data quality costs organizations an average of $12.9 million annually.

Source: https://www.trootech.com/blog/ai-development-cost

How to avoid it:

- Invest in data profiling and cleansing pipelines early.

- Use automated labeling tools with human-in-the-loop feedback for quality assurance.

- Enforce schema validation and distribution monitoring to detect drift.

- Consider weak supervision frameworks like Snorkel to balance coverage and accuracy in labeling.
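Distribution monitoring can start with a single drift score per feature. One common choice is the Population Stability Index (PSI), which compares the share of values in each bin between a baseline sample and a recent production sample. The sketch below is a minimal implementation; the bin edges, the `eps` smoothing constant, and the sample data are assumptions, and the often-quoted threshold of roughly 0.2 for meaningful drift is an industry rule of thumb rather than a standard.

```python
import math


def bin_shares(values, edges):
    """Fraction of values in each bin defined by sorted interior edges."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        i = sum(v >= e for e in edges)  # index of the bin v falls into
        counts[i] += 1
    return [c / len(values) for c in counts]


def psi(baseline, current, edges, eps=1e-6):
    """Population Stability Index: sum over bins of (cur - base) * ln(cur / base)."""
    b = bin_shares(baseline, edges)
    c = bin_shares(current, edges)
    # eps keeps empty bins from causing division by zero or log(0)
    return sum((ci - bi) * math.log((ci + eps) / (bi + eps)) for bi, ci in zip(b, c))


edges = [20, 40, 60]  # hypothetical bins for a feature such as customer age
baseline = [25, 35, 45, 55, 65, 30, 50, 42, 38, 61]
same = list(baseline)
shifted = [v + 15 for v in baseline]

print(round(psi(baseline, same, edges), 4))     # identical samples give 0
print(round(psi(baseline, shifted, edges), 4))  # shifted samples score well above 0
```

Run on a schedule per feature, a score like this turns “silent” drift into an alert before accuracy visibly degrades.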

Overfitting

Overfitting occurs when a model performs well on its training data but poorly on new, unseen inputs. The risk is highest with high-dimensional data or small datasets, both common in business domains where data is proprietary.

Overfit models can mislead teams with impressive offline accuracy, then quietly underperform in the field. In high-stakes sectors such as healthcare or finance, this can lead to regulatory risk or operational breakdowns.

How to avoid it:

- Use cross-validation, regularization techniques, and early stopping.

- Apply dropout, data augmentation, or model ensembling.

- Monitor the generalization gap during training and track real-world inference performance post-deployment.

- Prioritize interpretable models or add explainability layers (e.g., SHAP, LIME) to surface potential blind spots.
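The generalization gap is easy to see with k-fold cross-validation. In this sketch, a 1-nearest-neighbor regressor, which effectively memorizes its training points, achieves zero training error yet a clearly positive cross-validated error on the same noisy data. The data and the interleaved (unshuffled) fold assignment are illustrative assumptions; libraries such as scikit-learn provide shuffled, stratified splitters.

```python
def knn_predict(train, x, n_neighbors):
    """Predict y at x as the mean target of the n_neighbors closest training points."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:n_neighbors]
    return sum(y for _, y in nearest) / n_neighbors


def mse(train, eval_points, n_neighbors):
    """Mean squared error of k-NN predictions on eval_points."""
    errors = [(knn_predict(train, x, n_neighbors) - y) ** 2 for x, y in eval_points]
    return sum(errors) / len(errors)


def k_fold_cv(data, n_neighbors, folds=5):
    """Average validation MSE over `folds` interleaved train/validation splits."""
    scores = []
    for f in range(folds):
        val = data[f::folds]  # every folds-th point, starting at offset f
        train = [p for i, p in enumerate(data) if i % folds != f]
        scores.append(mse(train, val, n_neighbors))
    return sum(scores) / folds


# Hypothetical noisy data: y = x plus a fixed, repeating noise pattern
noise = [0.9, -1.1, 0.4, -0.7, 1.2, -0.3, 0.8, -1.0, 0.5, -0.6]
data = [(float(x), x + noise[x % len(noise)]) for x in range(20)]

# 1-nearest-neighbor memorizes the training set, so its training error is zero
train_err = mse(data, data, n_neighbors=1)
cv_err = k_fold_cv(data, n_neighbors=1)
print(f"train MSE={train_err:.2f}  CV MSE={cv_err:.2f}  gap={cv_err - train_err:.2f}")
```

Comparing this gap across candidate models or hyperparameters (here, `n_neighbors`) is the same check that tracks whether a model generalizes or merely memorizes.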

Latency Bottlenecks

AI systems, especially those relying on LLMs or RAG pipelines, often involve multiple steps: API calls, retrieval, and processing. Each step adds latency, which can be a problem for real-time applications such as chatbots, virtual assistants, and fraud detection.

Forbes reports that 40% of people abandon digital interactions that take longer than three seconds, and companies that deploy sluggish AI systems have to contend with internal resistance and declining usage.

How to avoid it:

- Use smaller or quantized models (e.g., a quantized LLaMA 3 8B) or faster hosted variants (e.g., GPT-4-Turbo) for low-latency inference.

- Cache common queries and retrievals via embedding fingerprints or local vector DBs.

- Deploy models to edge or regional servers to reduce round-trip times.

- Monitor latency metrics across pipelines using observability platforms like Arize AI or WhyLabs.
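Caching is often the cheapest latency win: repeated (or trivially different) questions should not trigger a fresh retrieval and model call. The sketch below keys a small in-memory LRU cache on a SHA-256 fingerprint of the normalized query text. `slow_pipeline` is a hypothetical stand-in for a RAG or LLM call; a production system would more likely use a shared store such as Redis and might match on embedding similarity rather than exact normalized text.

```python
import hashlib
from collections import OrderedDict


class QueryCache:
    """Tiny LRU cache keyed by a fingerprint of the normalized query."""

    def __init__(self, backend, max_items=1000):
        self.backend = backend
        self.max_items = max_items
        self.store = OrderedDict()

    @staticmethod
    def fingerprint(query):
        normalized = " ".join(query.lower().split())  # case- and whitespace-insensitive
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def ask(self, query):
        key = self.fingerprint(query)
        if key in self.store:
            self.store.move_to_end(key)  # mark as most recently used
            return self.store[key]
        answer = self.backend(query)
        self.store[key] = answer
        if len(self.store) > self.max_items:
            self.store.popitem(last=False)  # evict the least recently used entry
        return answer


calls = []


def slow_pipeline(query):
    # Hypothetical stand-in for retrieval plus LLM generation
    calls.append(query)
    return f"answer to: {query.strip()}"


cache = QueryCache(slow_pipeline, max_items=2)
cache.ask("How do I cancel my plan?")
cache.ask("  how do I CANCEL my plan? ")  # normalizes to the same key, served from cache
print(len(calls))
```

Cached answers go stale when the underlying documents change, so real deployments usually add a time-to-live or invalidate entries on re-indexing.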

Privacy and Security

Enterprise AI systems often process sensitive data: PII, financials, contracts. Improper handling during training, inference, or logging can create attack surfaces, violate regulations (e.g., GDPR, HIPAA), or expose businesses to legal risk.

How to avoid it:

- Apply differential privacy, anonymization, or synthetic data where possible.

- Choose vendors that support data residency, encryption at rest/in transit, and granular access control.

- Isolate inference layers that process sensitive data and strip logs of identifiable information.

- Conduct AI-specific threat modeling to anticipate prompt injection, model inversion, or poisoning attacks.
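Stripping identifiable information from logs can begin with pattern-based redaction applied before anything is written. The sketch below replaces email addresses and phone-number-like strings with typed placeholders. The regular expressions are simplified assumptions that will miss many real-world formats, so dedicated PII-detection tooling (for example, Microsoft Presidio) is the safer choice in production.

```python
import re

# Simplified, illustrative patterns; real PII detection needs far broader coverage
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text):
    """Replace each detected PII match with a typed placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


log_line = "User jane.doe@example.com called from +1 (555) 010-2398 about invoice 4417."
print(redact(log_line))
```

Typed placeholders keep logs useful for debugging (you can still see that a phone number was present) without retaining the value itself.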

Conclusion

AI is no longer confined to research labs or innovation teams; it now runs in production systems, business processes, and strategic planning. Business leaders need to understand the nuts and bolts of AI development not out of intellectual curiosity alone, but for operational reasons. From the reasoning behind retrieval-augmented pipelines to the subtleties of prompt engineering, understanding how these systems operate enables teams to scope more useful use cases, steer clear of common pitfalls, and evaluate build vs. buy decisions clearly.

This only scratches the surface, though. LLM fine-tuning, multi-agent systems, multimodal architectures, and vector-native applications could each be game-changing at scale, and each deserves its own briefing.

Stay ahead of the curve. Subscribe to our AI Insight Digest to receive future briefings on advanced AI architectures, orchestration frameworks, and emerging enterprise use cases, delivered in clear, actionable language built for technical leaders.
